AI human transformations

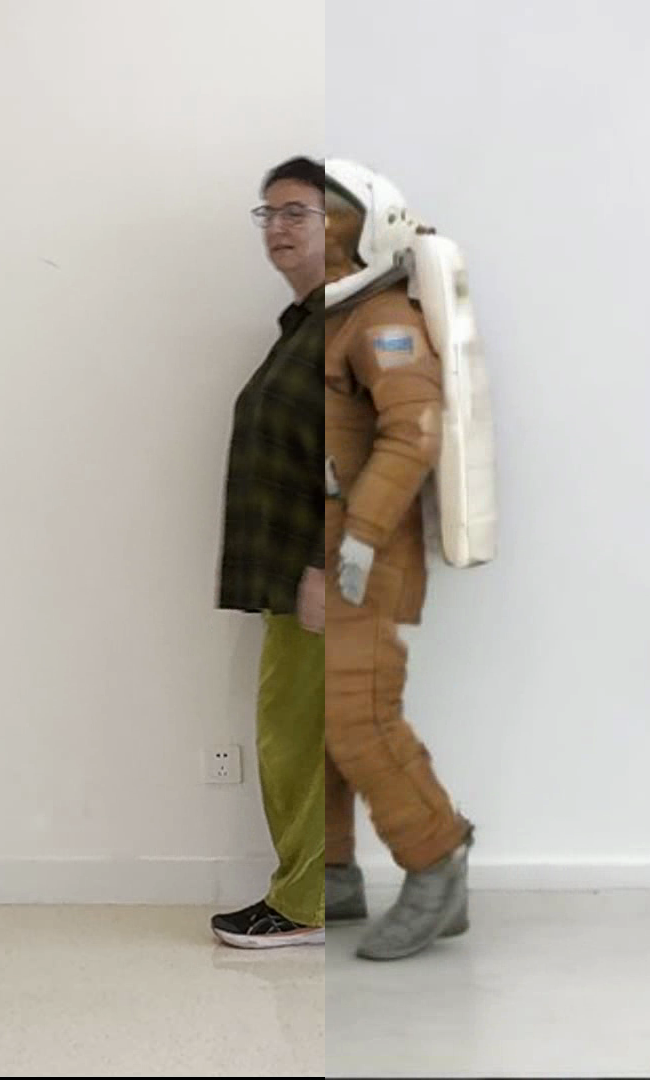

An interactive installation that uses image generation models in real time to transform part of the camera image into something else.

The project uses a state-of-the-art generative model that takes input from the camera in real time and transforms the person into something from another world.

The themes of the transformation can be customised and they change every few seconds so that participants get a chance to play with many alter-egos.
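The theme rotation described above could be driven by a simple timer. The following is a minimal sketch, not the installation's actual code: the theme prompts, the function name `current_theme`, and the five-second interval are all assumptions made for illustration.

```python
# Hypothetical theme prompts; the real installation's themes are configurable.
THEMES = [
    "half human, half ape",
    "creature made of coral",
    "robot from a distant planet",
]


def current_theme(themes, elapsed_seconds, interval_seconds=5.0):
    """Return the theme active after `elapsed_seconds`, cycling through the list.

    Each theme stays active for `interval_seconds`, then the next one takes over;
    after the last theme the cycle starts again from the first.
    """
    index = int(elapsed_seconds // interval_seconds) % len(themes)
    return themes[index]
```

In a render loop, `elapsed_seconds` would typically come from `time.monotonic()` measured against the start of the session, and the returned string would be passed to the model as the prompt for the next frame.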

A QR code lets participants take away a digital memento: a recording of their interaction, uploaded to a website for them to access.

The project was originally inspired by the game Exquisite Corpse, where players draw parts of a being without knowing what the other parts show.

We are using Stable Diffusion XL Turbo, which is quite fast, but not fast enough on its own for a real-time installation. To achieve real-time rendering, we built a custom pipeline that runs multiple RTX 4090 GPUs in parallel; they receive frames from the client and respond with frames rendered by Stable Diffusion.
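One way to picture such a pipeline is a round-robin dispatcher that tags each frame with a sequence number, hands it to the next worker, and reorders the results as they come back. The sketch below illustrates that idea only, using the Python standard library: threads and queues stand in for the GPU workers and the zmq sockets, and `render` is a hypothetical placeholder for Stable Diffusion inference. It is not the project's actual implementation.

```python
import queue
import threading


def render(frame, worker_id):
    """Hypothetical stand-in for Stable Diffusion inference on one GPU."""
    return f"{frame}@gpu{worker_id}"


def worker(worker_id, inbox, outbox):
    """Process (sequence number, frame) items until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:
            break
        seq, frame = item
        outbox.put((seq, render(frame, worker_id)))


def run_pipeline(frames, num_workers=4):
    """Distribute frames round-robin across workers and return results in order."""
    inboxes = [queue.Queue() for _ in range(num_workers)]
    outbox = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(i, inboxes[i], outbox))
        for i in range(num_workers)
    ]
    for t in threads:
        t.start()

    # Tag every frame with its sequence number so output order can be restored.
    for seq, frame in enumerate(frames):
        inboxes[seq % num_workers].put((seq, frame))
    for inbox in inboxes:
        inbox.put(None)  # sentinel: no more frames for this worker

    # Workers finish in arbitrary order; buffer results until the next
    # expected sequence number is available.
    pending, ordered, next_seq = {}, [], 0
    for _ in range(len(frames)):
        seq, result = outbox.get()
        pending[seq] = result
        while next_seq in pending:
            ordered.append(pending.pop(next_seq))
            next_seq += 1

    for t in threads:
        t.join()
    return ordered
```

With N workers each taking roughly the same time per frame, the achievable frame rate scales approximately with N, at the cost of a fixed latency of about one inference time. A live installation would likely also drop frames that arrive too late rather than wait for them, which this sketch does not do.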

Half man, half ape: our team member Ben setting up the interactive installation on a big LED wall in our lab.

Technology: Stable Diffusion XL Turbo // ZeroMQ (zmq) messaging // FastAPI // NVIDIA RTX 4090 // PyTorch

Credits:

Design / Production: Jesse Wolpert / Theo Papatheodorou

Technical implementation: Ben Schumacher